A modest desire

The red pyramid in this image is a custom 3D handle that you can grab, drag, and move around the screen. When it touches another GameObject, it finds any Sockets on that object, and attempts to rotate + move + scale the first object so that the two connect together, like magnets.
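Here is a minimal sketch of the snapping step. It assumes each Socket has a world-space position, an outward-facing normal and a size, and that "connected" means the two sockets coincide with their normals pointing at each other. This is a hypothetical reconstruction in Python, not Snap-and-Plug's actual code; `Socket`, `rotation_between` and `snap_transform` are illustrative names. The rotation uses the standard formula for turning one unit vector onto another, with a special case when the two vectors point in opposite directions.

```python
# Hypothetical sketch of socket snapping; not Snap-and-Plug's implementation.
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def mul(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    return mul(a, 1.0 / math.sqrt(dot(a, a)))


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def rotation_between(u: Vec3, v: Vec3) -> Mat3:
    """Rotation matrix that turns direction u onto direction v."""
    u, v = normalize(u), normalize(v)
    c = dot(u, v)
    identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    if c > 1.0 - 1e-9:  # already aligned
        return identity
    if c < -1.0 + 1e-9:  # opposite: turn 180 degrees about any axis perpendicular to u
        helper = (1.0, 0.0, 0.0) if abs(u[0]) < 0.9 else (0.0, 1.0, 0.0)
        k = normalize(cross(u, helper))
        return [[2.0 * k[i] * k[j] - identity[i][j] for j in range(3)] for i in range(3)]
    # general case: R = I + [w]x + [w]x^2 / (1 + c), with w = u x v
    w = cross(u, v)
    k_mat = [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]
    k_sq = [[sum(k_mat[i][n] * k_mat[n][j] for n in range(3)) for j in range(3)] for i in range(3)]
    f = 1.0 / (1.0 + c)
    return [[identity[i][j] + k_mat[i][j] + f * k_sq[i][j] for j in range(3)] for i in range(3)]


@dataclass
class Socket:  # hypothetical socket description
    position: Vec3  # world space
    normal: Vec3    # outward-facing direction
    size: float


def snap_transform(moving: Socket, target: Socket):
    """Return (rotation, scale, transform) that carries `moving` onto `target`."""
    scale = target.size / moving.size
    rotation = rotation_between(moving.normal, mul(target.normal, -1.0))

    def transform(p: Vec3) -> Vec3:
        # rotate and scale about the moving socket, then place it on the target socket
        return add(mul(mat_vec(rotation, sub(p, moving.position)), scale), target.position)

    return rotation, scale, transform
```

Applying `transform` to every vertex (or to the object's pivot) of the first object makes the sockets meet. The sketch leaves the twist about the socket axis unconstrained. A real snapping tool would probably also align a secondary "up" direction stored on each socket.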

…these Sockets are so useful that I quickly end up adding many to each GameObject. I have different sizes and “types” (type A can only connect to other type A, etc.) – each with a different mesh (Pyramid, Cube, Sphere, squiggle … whatever). But Unity has a weak Hierarchy View, and it’s very hard to see which is which:

This problem has existed in Unity for 5+ years. Their API for the Hierarchy View is private, making it hard for 3rd parties to fix it. I’ve hacked bits of it myself, but the hacks have often broken in successive versions of Unity, and need constant refreshing – they are not a robust solution.

So, I wanted to make a Custom Inspector (Unity allows Inspectors to be customized and gives the developer full control, although the APIs for it are poor) that would render each Socket’s custom mesh and show it as a preview icon (where the white squares are in this image):

Summary: Display a thumbnail of the mesh for each object, in the Inspector

Unity is a 3D engine, with a 2D mode (which is still 3D, but has hundreds of extra classes to facilitate 2D game dev). It was customizable almost from Day 0, and “customization” is one of its biggest current selling points – cf. the Asset Store, from which Unity makes vast amounts of money.

So: we expect that “render a simple mesh and display it next to the text saying the object’s name” should be easy, right?

Let’s go on a little journey…

Problem 1: How to make that pyramid?

Unity does not allow developers to make clickable 3D objects in the Editor.

There are a bunch of ugly, pre-made, 2D-only “handles” you are given, and one of them can be customized (through a torturous process of specifying matrices) into drawing arbitrary rectangles in 2D, with no lighting, and breaking the Scene’s 3D positioning.

I was using that up until now. It’s ugly, but it works fine. Surprisingly, there’s not even a “drawTriangle” command. The common workaround seems to be: find the largely undocumented Graphics.DrawMesh…() methods, use trial-and-error to discover that one of them (only one!) works, and use that to draw a Mesh here.

Problem 2: How is that pyramid clickable?

I got the mesh drawing. This took a while … but what’ll really flubble your goat is when you try to make it clickable and draggable. As far as I can tell, Unity has no support for clickable meshes or objects.

There’s huge support in-game, but in-Editor it vanishes. Their click-detection is almost entirely physics-based, but in the Editor they have to disable the Physics engine, otherwise editing would be a nightmare. Imagine: you’d try to move things, and the physics would bounce them back, or block them, or send them flying.

Some of the physics is enabled – the basic collision-detection. But you cannot use it to build Editor features: if you did, the physics for the Editor features would interfere with the physics for the game, or become part of it. Unity does not currently allow running multiple physics simulations in parallel. IIRC it’s a feature of the middleware they’re using, but they’ve disabled that feature.

The basic elements of interaction in 3D are therefore mostly missing in Editor: Collision detection, Mouse over, Mouse clicks, etc. There are some old bits of legacy code for some of these, but they’ve not been maintained in many years, and are no longer (or never were) included in the main documentation.

Back to Basics: Problem 2.1: Unity has no Triangle class

Workaround: create a “has the mouse clicked this triangle?” routine, and apply it to every triangle in the mesh. Use this to build up a working “click and drag a mesh” routine.

Obviously, being a 3D engine, Unity will have a method “Triangle.ContainsPoint( Vector2 )”. Right?

Wrong. You have to write your own. Or use Google – unfortunately the first two I tried had bugs (which is disappointing; this is a very well-known/solved problem in Computer Graphics). I fixed the maths, did some tweaking, and it worked fine.
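For reference, here is a minimal Python version of a point-in-triangle test. It uses the common edge-function formulation, which checks the sign of a 2D cross product for each edge. It is a sketch, not the fixed version described above. It accepts either winding order, counts points exactly on an edge as inside, and rejects zero-area triangles; you may want to change any of those choices. `mesh_hit` is a hypothetical helper that applies the test to every screen-space triangle.

```python
# Sketch of a 2D point-in-triangle test (edge-function / sign method).
Point = tuple[float, float]


def _edge(a: Point, b: Point, p: Point) -> float:
    # z of cross(b - a, p - a): > 0 if p is left of a->b, < 0 if right, 0 if on the line
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def triangle_contains_point(a: Point, b: Point, c: Point, p: Point, eps: float = 1e-9) -> bool:
    if abs(_edge(a, b, c)) < eps:
        return False  # degenerate (zero-area) triangle
    d1, d2, d3 = _edge(a, b, p), _edge(b, c, p), _edge(c, a, p)
    has_neg = d1 < -eps or d2 < -eps or d3 < -eps
    has_pos = d1 > eps or d2 > eps or d3 > eps
    # inside (or on an edge) when p is never on both sides, whatever the winding
    return not (has_neg and has_pos)


def mesh_hit(screen_triangles: list[tuple[Point, Point, Point]], p: Point) -> bool:
    """True if p lies in any of the given screen-space triangles."""
    return any(triangle_contains_point(a, b, c, p) for a, b, c in screen_triangles)
```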

OK. Now we have a mesh that renders in Editor, and is clickable and draggable in Editor. Finally we can add the preview to the Inspector.

Problem 3: Unity’s “click mouse button” API is undocumented

Now you hit problems with mouse control. Unity has a good (albeit non-extensible) system for this – but the smart person who wrote it didn’t bother documenting how it works, and it’s non-trivial. Without documentation, almost no-one knows how to use it, and after many attempts, most give up and use something lower-quality they hack together. cf. any Google search on “Unity scene view mouse clicks”.

It took me months to reverse-engineer it, partly by decompiling the Unity libraries and partly through intelligent guesswork based on previous game engines I worked on. I’ve met a few others who’ve done the same, but none who found it especially quick or easy.

Since 2015, some of this system has been semi-documented, e.g. via some good Unity blog posts. But those aren’t linked to from anywhere, and you have to work quite hard to find them (I don’t have the links any more; when I tried a quick search to find them, I drew a blank).
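As far as I can tell, the system is a variant of the classic immediate-mode GUI "hot control" pattern. Every control sees every event. A control that wins a mouse-down claims ownership of the mouse, and drag and mouse-up events are then handled only by that owner until it releases it. In Unity's Editor, `GUIUtility.GetControlID`, `GUIUtility.hotControl` and `Event.current` are part of this machinery. The Python below is only a toy model of the pattern, not Unity's implementation, and every name in it is hypothetical.

```python
# Toy model of the immediate-mode "hot control" pattern; names are hypothetical.
from dataclasses import dataclass


@dataclass
class MouseEvent:
    kind: str                 # "down", "drag" or "up"
    pos: tuple[float, float]  # GUI coordinates
    used: bool = False        # set once some control has consumed the event


class MouseOwner:
    """Tracks which control currently owns the mouse; 0 means nobody."""

    def __init__(self) -> None:
        self.hot = 0


@dataclass
class DragHandle:
    control_id: int
    pos: tuple[float, float]
    radius: float = 5.0
    grab_offset: tuple[float, float] = (0.0, 0.0)

    def hit(self, p: tuple[float, float]) -> bool:
        dx, dy = p[0] - self.pos[0], p[1] - self.pos[1]
        return dx * dx + dy * dy <= self.radius * self.radius

    def on_event(self, ev: MouseEvent, owner: MouseOwner) -> None:
        if ev.used:
            return
        if ev.kind == "down" and owner.hot == 0 and self.hit(ev.pos):
            owner.hot = self.control_id  # claim the mouse
            # remember where inside the handle it was grabbed, so it doesn't jump
            self.grab_offset = (self.pos[0] - ev.pos[0], self.pos[1] - ev.pos[1])
            ev.used = True
        elif ev.kind == "drag" and owner.hot == self.control_id:
            self.pos = (ev.pos[0] + self.grab_offset[0], ev.pos[1] + self.grab_offset[1])
            ev.used = True
        elif ev.kind == "up" and owner.hot == self.control_id:
            owner.hot = 0  # release the mouse
            ev.used = True


def dispatch(events: list[MouseEvent], controls: list[DragHandle], owner: MouseOwner) -> None:
    # immediate-mode style: every control gets a look at every event, in order
    for ev in events:
        for control in controls:
            control.on_event(ev, owner)
```

The ownership check is what stops a fast drag from "falling off" the handle. Once a control owns the mouse, drag events reach it even when the cursor has moved outside its hit area.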

Problem 4: Unity’s mousePosition.y has been broken for 5 years

This core feature – the y-position of the mouse – has been broken for so long that everyone has now hardcoded their games to work around it. I suspect Unity has decided: “We cannot fix it, it MUST remain broken.”

IMHO it would be fairly easy to fix: create a new global API call that returns the mouse-position (NB: There is no such API call in Unity today; there are two obscure calls in different places, one that only works in game, one that works in game AND in editor, and they return incompatible values).

Workaround: take the y-position, multiply by -1, and add the Sceneview height. But not the camera’s height (as advised by most websites and forum posts) – the Sceneview has a magic height that differs from the Scene View Camera height by 5 to 25 pixels, and the difference varies over time. Hmm.
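The workaround amounts to flipping between a top-left origin (y grows downward) and a bottom-left origin (y grows upward). Here is a trivial Python sketch. The hypothetical `sceneview_height` parameter stands for the Scene View's own height, which, per the above, is not the Scene View Camera's height. This code does not know how to obtain that value.

```python
# Sketch of the y-flip workaround; `sceneview_height` must be supplied by the caller.
def flip_y(y: float, sceneview_height: float) -> float:
    """Convert between top-left-origin and bottom-left-origin y coordinates.

    y -> height - y is its own inverse, so the same function works in both directions.
    """
    return sceneview_height - y


def click_error(true_height: float, assumed_height: float) -> float:
    """Vertical error (pixels) from flipping with the wrong height; it is the same for every y."""
    return abs(flip_y(0.0, true_height) - flip_y(0.0, assumed_height))
```

Flipping with the camera height instead of the Scene View height shifts every click by the difference between the two. That shows up as the constant, few-pixel offset described above.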

Problem 5: Unity has no GUI system for the Editor

Almost 2 years ago, after 2 years of promising a modern GUI API, Unity finally published a reasonable new GUI, by hiring the author of the main 3rd-party GUI replacement for Unity.

A few years ago, Unity Corp rebranded the nasty, end-of-life, old GUI as “the legacy GUI” and brushed it under the carpet (with good reason). Sadly, despite the marketing department’s claims, it’s very much not legacy: it’s the only GUI you can use in the Editor.

Problem 6: I already drew a mesh; can’t I do the same again?

I said the Graphics.DrawMesh…() methods were undocumented, and only one works (in that context). But when you try to use them in the Inspector, they both silently fail.

Since there’s no documentation, there’s no way of knowing if this is hardcoded, or if it does in fact work, but requires a secret method call first, or a pre-multiplication on your arguments, or … or … etc. Something that only Unity staff know about.

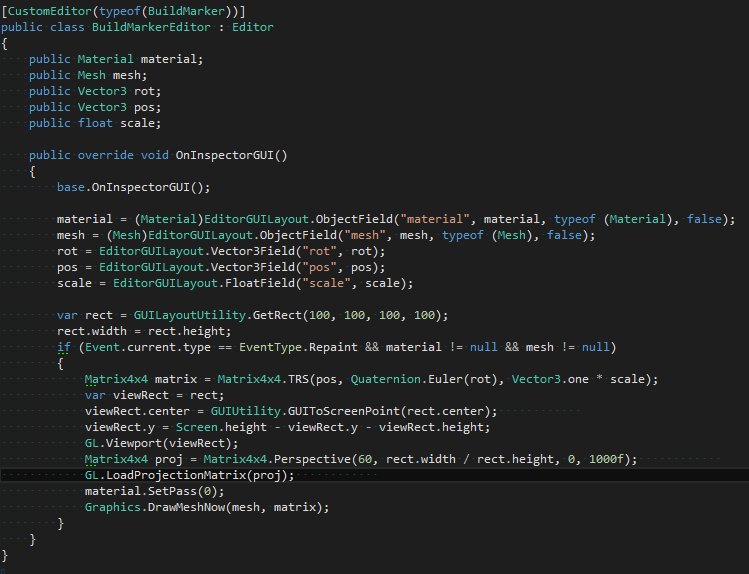

UPDATE: Sampsa sent me some code on Twitter which gets DrawMeshNow working using some GL calls:

Problem 7: Unity often blocks/disables procedural content

BUT WAIT!

There is a library call for rendering thumbnails of meshes, and it is used widely throughout Unity.

Ah, except … As a developer, you are blocked from using it on user-generated content.

You are also banned from using it on content generated by scripts: you can create a Mesh in script, but you can’t use this library call on it. The library call is hardcoded to only work on things that are compiled as part of the Build.

If I were to make a bad Plugin, that could only use a pre-made set of meshes, this would be fine [*]. But I want users to make custom meshes (that are easier to use with their own games) and scripted-meshes (that adapt based on context, making it many times easier to build large, complex games), etc.
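Here is a minimal Python sketch of a "scripted mesh that adapts based on context": a parametric version of the pyramid handle. It has a square base of half-width `half_size` and an apex at `height`. It returns a vertex list plus a flat triangle-index list with three indices per triangle, the layout Unity's `Mesh.triangles` uses. The function and parameter names are hypothetical. Faces are wound counter-clockwise when seen from outside. Engines disagree on which winding counts as front-facing, so `flip_winding` reverses every triangle.

```python
# Hypothetical procedural pyramid: vertices plus a flat triangle-index list.
Vec3 = tuple[float, float, float]


def make_pyramid(half_size: float = 0.5, height: float = 1.0,
                 flip_winding: bool = False) -> tuple[list[Vec3], list[int]]:
    """Square-based pyramid with its base on the y = 0 plane and its apex on the +y axis."""
    s = half_size
    vertices: list[Vec3] = [
        (-s, 0.0, -s),        # 0
        (s, 0.0, -s),         # 1
        (s, 0.0, s),          # 2
        (-s, 0.0, s),         # 3
        (0.0, height, 0.0),   # 4: apex
    ]
    triangles: list[int] = []
    for i in range(4):
        # one side face per base edge; (next, current, apex) is counter-clockwise from outside
        triangles += [(i + 1) % 4, i, 4]
    # base: two triangles facing -y
    triangles += [0, 1, 2, 0, 2, 3]
    if flip_winding:
        for t in range(0, len(triangles), 3):
            triangles[t + 1], triangles[t + 2] = triangles[t + 2], triangles[t + 1]
    return vertices, triangles
```

Because `half_size` and `height` are plain parameters, the same function can regenerate the handle whenever its context changes. The vertices are shared between faces, which keeps the sketch short. For crisp flat shading you would normally duplicate them per face so that each face gets its own normals.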

I hit this limitation of Unity depressingly often. Many core features of Unity have the same or similar restriction: they are disabled both for runtime (procedural content in-game) and for developers working live with procedural gen (who do many things in script).

[*] = (actually, it wouldn’t be fine. It would work, but still be a little ugly, because Unity doesn’t let plugin authors bundle their meshes. My meshes would pollute every game-project that used them. You see this a lot in plugins: random things dumped into your project, because the plugin author cannot stop Unity from spamming your project with them)

…at which point, I gave up and wrote this blog post

I’ve decided I’ll do what most people do:

- Give in.

- Make my Plugin worse (delete features and code I’d already implemented)

- …so that it’ll work with Unity

When the next version of Snap-and-Plug goes live, and meshes can’t be customized in code, and can’t be reactive … you’ll know why.

Conclusion: I hate extending Unity

You’ll read this, be glad it wasn’t you that wasted hours banging your head against these brick walls, and move on. I have now battered through the pain, and I’ve decided on my workaround.

But next time you buy a Unity Asset Store plugin, and find yourself disappointed that it crashes, or works strangely, or doesn’t have a great GUI … instead of hating on the author(s), consider this: maybe – just maybe – it’s not their fault. Unity has a lot of work to do in cleaning up their Editor/Plugin/Developer APIs…

2 replies on “Trying to paint an in-editor Mesh in #unity3d: harder than you’d expect”

With the amount of headaches that it causes you, why do you stick with Unity3D?

Good question; I’m looking out for an excuse to write an equivalent post about what makes you love Unity. Watch this space.